The Systems Life Cycle Analysis

CHAPTER SYNOPSIS

In chapter three we began a general discussion of the first two major phases in the system development life cycle - analysis, examination and study. Because system development is a business function, and because it is rather complex, it stands to reason that its processes and activities should be organized into systems, and that there should be more or less formal procedures which describe in detail how the processes are to be performed.

Systems for the development of systems, as with all other business systems, must operate within a framework. The system development framework is called the system development life cycle. The processes and activities within this framework are usually performed according to well-defined and comprehensive sets of procedures called methodologies.

Introduction

Before we begin our discussion of the system development life cycle we should set some perspective.

Since most business activities involve the gathering, manipulation, presentation or dissemination of data or information, any system development life cycle must concentrate on those activities.

Since many business procedures are or soon will be automated, or semi-automated, any system development life cycle must acknowledge, account for, and provide for automation considerations.

The development of business systems is a business function and as such should be subject to the same management and controls as any other procedure. Its processes and activities should be performed within a framework and according to a well-defined set of procedures.

The development of business systems, as a business function, is subject to the same types of changes which affect other business functions, and the systems and procedures which govern how it operates are subject to the same change agents as any other business systems or procedures.

Analysis

Regardless of the level being addressed or the particular methodology employed, analysis must examine the current environment from a functional, process and data perspective. The aim of analysis is to document the existing user functions, processes, activities and data.

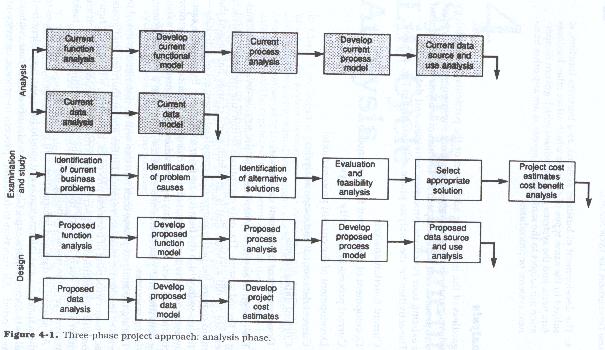

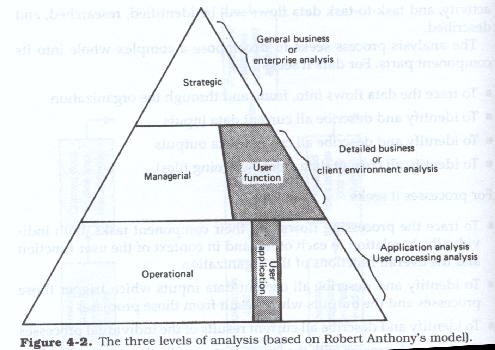

The activities for the analysis phase as illustrated in figure 4-1 are:

The design of any new system must be predicated upon an understanding of the old system. The only exception to this is where there is no old system, that is, where some change has caused the firm to require the performance of a completely new set of activities with which it has no prior experience. Even in this case, however, no activities occur within a vacuum. Even completely new activities, and their accompanying procedures, must interact with existing activities and procedures and must use, if only to a limited extent, existing resources.

During the analysis phase, each function will be described in detail in terms of its charter, mission, responsibilities and authorities, and goals.

Each major grouping of business processes, or system, within each function will be identified and described in terms of the processes which are within it.

For each activity there will be a description of the tasks involved, and the resources being used.

All sources of data and information will be identified, as will all uses to which that data and information are put. All data forms, reports and files will be identified and their contents inventoried.

All function to function, process to process, activity to activity, and task to task data flows will be identified, researched and described.

The analysis process seeks to decompose a complex whole into its component parts. For data it seeks:

For processes it seeks:

Multiple Levels of Analysis

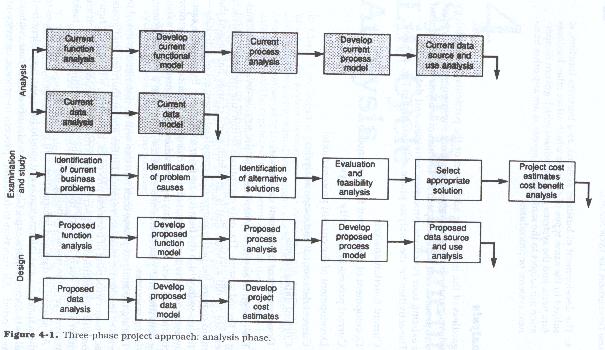

Given Robert Anthony's multilevel view of the organization (see Chapter 2 for a discussion of Anthony's model) and an understanding of the differing viewpoints and systems needs of each level within the organization, there is an implication that there are different types of analysis and perhaps even multiple levels of analytical activity. Any methodology must recognize this and allow for analysis with a multilevel approach. Although there can be many iterations of analysis, for practical purposes we usually restrict ourselves to three. (See Figure 4.2.)

The first is identified as the general or business environmental analysis level, and it concentrates on the firm-wide functions, processes, and data models and has been called by some "enterprise analysis." This type of analytical project has the widest scope in terms of numbers of functions covered and looks at the highest levels of the corporation. This level corresponds to the strategic level of the management pyramid.

The second is identified as detailed business or client environmental analysis and concentrates on the functions, processes, and data of the individual client or user functional areas. This type of project is narrower in scope than an enterprise level analysis and may be limited to a single high-level function, such as human resources, finance, operations, marketing, etc. This type of project corresponds to the managerial or administrative level of the managerial pyramid.

The third is the most detailed and can be considered as the application level which addresses the design of the user processing systems. This type of project has the narrowest scope and usually concentrates on a single user functional area. It is within this type of project that individual tasks are addressed. This type of project corresponds to the operational level of the managerial pyramid.

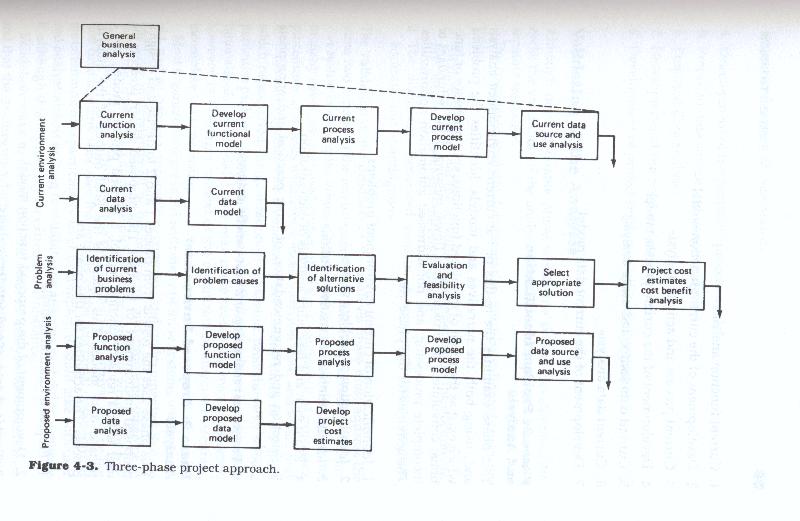

Regardless of the level being addressed or the methodology employed, each analytical project should follow the three-phase approach.

Three-Phase Approach to Analysis

Phase 1 – Current Environment Analysis

This phase looks at the current environment from a functional, process, and data perspective. The goal is to document the existing user functions, processes, activities, and data (Figure 4.3). The activities for this phase are:

Phase 2 – Problem Identification And Analysis

This phase examines the results of the current environment analysis and, using both the narratives and models, identifies the problems which exist as the result of misplaced functions; split processes or functions; convoluted, or broken, data flows; missing data; redundant or incomplete processing; and non-addressed automation opportunities. The activities for this phase are:

Phase 3 – Proposed Environment Analysis

This phase uses the results from phases 1 and 2 to devise and design a proposed environment for the user. This proposed design presents the user with a revised function model, a revised process model, and a revised data model. The output of this phase is the final output of the analysis process. The activities for this phase are:

Validation of the Analysis

Although not a definable phase within the system development life cycle, the validation of the analysis is important enough that it deserves separate treatment.

The completed analytic documentation must be validated to ensure that:

The validation of the products of the analysis phase must address the same two aspects of the environment: data and the processing of data.

A system is a complex whole. Old systems are not only complex, but in many cases, as illustrated in figure 4-2, they are a patchwork of processes, procedures and tasks which were assembled over time, and which may no longer fit together into a coherent whole. Many times needs arose which necessitated the generation of makeshift procedures to solve a particular problem.

Over time these makeshift procedures become institutionalized. Individually they may make sense, and may even work; however, in the larger context of the organization, they are inaccurate, incomplete and confusing.

Most organizations are faced with many of these systems which are so old, and so patched, incomplete, complex and undocumented that no one fully understands all of the intricacies and problems inherent in them, much less has an overview picture of the complete whole. The representation of the environment as portrayed by the analyst may be the first time that any user sees the entirety of the functional operations. If the environment is particularly large or complex, it could take both user and analyst almost as long to validate the analysis as it did to generate it, although this is probably extreme.

Validation seeks to ensure that the goals of analysis have been met, and that the documents which describe the component parts of the system are complete and accurate. It also attempts to ensure that for each major component, the identified sub-component parts recreate or are consistent with the whole.
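The requirement that sub-component parts "recreate or are consistent with the whole" can be sketched as a mechanical check. The following is a minimal illustration only, assuming each documented component records the data flows it receives and produces; the `Component` class, its field names, and the payroll example are hypothetical and are not part of any particular methodology.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """A documented component; all field names here are illustrative."""
    name: str
    inputs: set[str] = field(default_factory=set)
    outputs: set[str] = field(default_factory=set)
    parts: list["Component"] = field(default_factory=list)


def check_consistency(whole: Component) -> list[str]:
    """Report flows where the sub-components fail to recreate the whole."""
    problems: list[str] = []
    if not whole.parts:
        return problems
    produced = set().union(*(p.outputs for p in whole.parts))
    consumed = set().union(*(p.inputs for p in whole.parts))
    # Flows produced by one part and consumed by another are internal.
    internal = produced & consumed
    for flow in sorted(whole.inputs - consumed):
        problems.append(f"{whole.name}: input '{flow}' is used by no sub-component")
    for flow in sorted(whole.outputs - produced):
        problems.append(f"{whole.name}: output '{flow}' is produced by no sub-component")
    for flow in sorted((consumed - internal) - whole.inputs):
        problems.append(f"{whole.name}: sub-component input '{flow}' is missing from the whole")
    for flow in sorted((produced - internal) - whole.outputs):
        problems.append(f"{whole.name}: sub-component output '{flow}' is missing from the whole")
    # Repeat the check at each lower level of decomposition.
    for part in whole.parts:
        problems.extend(check_consistency(part))
    return problems


# Hypothetical example: the documented parts never produce the tax report.
payroll = Component(
    "Payroll",
    inputs={"timesheet"},
    outputs={"paycheck", "tax report"},
    parts=[
        Component("Compute pay", {"timesheet"}, {"gross pay"}),
        Component("Issue check", {"gross pay"}, {"paycheck"}),
    ],
)
problems = check_consistency(payroll)
```

A check of this kind only flags candidates for review; whether a reported gap is an error in the documentation or a real gap in the existing system is still a judgment for the analyst and user.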

To use an analogy, the processes of system analysis and system design are similar to taking a broken appliance apart, repairing the defective part and putting it back together again. It is easy to take the appliance apart, somewhat more difficult to isolate the defective part and repair it, and most difficult to put all the pieces back together again so that it works. The latter is especially true when the schematic for the appliance is missing, incomplete or, worse, inaccurate.

If during the repair process new, improved, or substitute parts or components are expected to replace some of the existing parts, or if some of the existing parts are being removed because they are no longer needed, the lack of proper documentation becomes a problem almost impossible to overcome.

The documentation created as a result of the analysis is similar to the creation of a schematic as you disassemble the appliance; the validation process is similar to making sure that all the pieces are accounted for on the schematic you created. However, because your intent is to repair and improve, the documentation you create must not only describe where each component fits, but why it is there, what its function is, and what, if any, problems exist with the way it was originally engineered and constructed.

Validation must not only ensure that existing procedures are correctly documented, but also that, to the extent possible, the reasons or rationale behind the procedures have also been documented.

The validation of the products of analysis seeks to ensure that the verifier sees what is there and not what should be there.

Systems analysis, by its very nature, works to identify, define and describe the various component pieces of the system. Each activity and each investigation seeks to identify and describe a specific piece. The piece may be macro or micro, but it is nonetheless a piece. Although it is usually necessary to create overview models, these overview levels, at the enterprise and functional levels, seek only to create a framework or guidelines for the meat of the analysis, which is focused on the operational tasks. It is the detail at the operational levels which can be validated. The validation of both data and process works at this level. Each activity, each output and each transaction, identified at the lowest levels, must be traced from its end point to its highest level of aggregation or to its point of origination.

In the analysis, the analyst is fact gathering, and seeking to put together a picture of the current environment.

During validation the analyst begins with an understanding of the environment and the pictures or models he has constructed. The aim here however is to determine:

It must be understood that the analysis documents represent a combination of both fact and opinion. They are also heavily subjective. They are based upon interview, observation and perception. Validation seeks to assure that perception and subjectivity have not distorted the facts.

The generation of diagrammatic models, at the functional, process and data levels, greatly facilitates the process of validation. Where these models have been drawn from the analytical information, and where they are supplemented by detailed narratives, the validation process may be reduced to two stages.

Stage one - Cross-referencing the diagrams to the narratives, to ensure that:

Stage two - Cross-referencing across the models. This includes ensuring that:

Walk-Throughs

Walk-throughs are one of the most effective methods for validation. In effect they are presentations of the analytical results to a group of people who were not party to the initial analysis. This group of people should be composed of representatives of all levels of the affected user areas as well as the analysts involved. The function of this group is to determine whether any points have been missed. In effect this is a review committee.

Since the analysis documentation should be self-explanatory and non-ambiguous, it should be readily understandable by any member of the group. The walk-through should be preceded by the group's members reading the documentation and noting any questions or areas which need clarification. The walk-through itself should take the form of a presentation by the analysts to the group, and should be followed up by question and answer periods. Any modifications or corrections required to the documents should be noted. If any areas have been missed, the analyst may have to perform the needed interviews and a second presentation may be needed. The validation process should address the documentation and models developed from the top two levels - the strategic and the managerial - using the detail from the operational.

Each data item contained in each of these detail transactions should be traced through these models, end to end. That is, from its origination as a source document, through all of its transformations into output reports and stored files. Each data element, or group of data elements contained in each of these stored files and output reports, should be traced back to a single source document.

Each process which handles an original document or transaction should be traced to its end point. That is, the process to process flows should be traced.
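Tracing process to process flows to their end points can be illustrated with a simple traversal. This is a sketch under stated assumptions: the documented flows are held as a mapping from each process to the processes which receive its output, and a process with no onward flow is treated as an end point. The function name and the order-handling processes are hypothetical.

```python
def trace_to_end_points(flows: dict[str, list[str]], start: str) -> set[str]:
    """Return the end-point processes reachable from `start`.

    `flows` maps each process to the processes that receive its output;
    a process with no onward flow is treated as an end point.
    """
    end_points: set[str] = set()
    seen: set[str] = set()
    stack = [start]
    while stack:
        process = stack.pop()
        if process in seen:
            continue  # guard against loops in the documented flows
        seen.add(process)
        successors = flows.get(process, [])
        if not successors:
            end_points.add(process)
        stack.extend(successors)
    return end_points


# Hypothetical order-handling flows taken from a process model.
flows = {
    "Receive order": ["Check credit"],
    "Check credit": ["Fill order", "Reject order"],
    "Fill order": ["Ship goods"],
}
end_points = trace_to_end_points(flows, "Receive order")
```

An end point which the users do not recognize as a genuine conclusion of the work suggests that a process to process flow was missed during the analysis.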

Input/Output Validation

This class of analytical validation begins with system inputs and traces all flows to the final outputs. This class also includes transaction analysis, data flow analysis, data source and use analysis, and data event analysis. For these methods each data input to the system is flowed to its final destination. Data transformations and manipulations are examined (using data flow diagrams) and outputs are documented. This type of analysis is usually left to right, in that the inputs are usually portrayed as coming in on the left and going out from the right. Data flow analysis techniques are used to depict the flow through successive levels of process decomposition, arriving ultimately at the unit task level.

Validation of these methods requires that the analyst and user work backward from the outputs to the inputs. To accomplish this, each output or storage item (a data item stored in an on-going file, which is also an output) is traced back through the documented transformations and processes to its ultimate source. Output to input validation does not require that all data inputs be used; however, all output or stored data items used must have an ultimate input source, and should have a single input source.

Input to output validation works in the reverse manner. Here, each input item is traced through its processes and transformations to the final output.

Validation of output to input, or input to output, analysis looks for multiply sourced data items, and data items acquired but not used.
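The output to input trace can be sketched in a few lines. This is an illustration only, assuming the analysis documents can be reduced to two mappings: which data items each input document carries, and which items each derived item is computed from. The function name, the result keys, and the invoice example are hypothetical.

```python
def validate_output_to_input(inputs, derivations, outputs):
    """Trace each output back to its input sources and report anomalies.

    inputs:      input document -> set of data items it carries
    derivations: derived item -> set of items it is computed from
    outputs:     output or stored items to be traced back
    """
    sources = {}  # data item -> input documents which carry it
    for document, items in inputs.items():
        for item in items:
            sources.setdefault(item, []).append(document)

    used = set()       # input items reached from at least one output
    unsourced = set()  # items with neither a derivation nor an input source

    def trace_back(item, path=()):
        if item in path:
            return  # circular derivation in the documents; stop here
        if item in derivations:
            for parent in derivations[item]:
                trace_back(parent, path + (item,))
        elif item in sources:
            used.add(item)
        else:
            unsourced.add(item)

    for out in outputs:
        trace_back(out)

    return {
        "unsourced": sorted(unsourced),
        # Only items actually used by an output are reported here.
        "multiply_sourced": sorted(i for i in used if len(sources[i]) > 1),
        "acquired_not_used": sorted(set(sources) - used),
    }


# Hypothetical example data.
inputs = {
    "Order form": {"customer id", "quantity", "fax number"},
    "Price list": {"unit price"},
    "Customer master": {"customer id"},
}
derivations = {"invoice total": {"quantity", "unit price", "tax rate"}}
report = validate_output_to_input(inputs, derivations, ["invoice total", "customer id"])
```

In this example the tax rate has no documented source, the customer id arrives on two documents, and the fax number is acquired but never reaches an output. Each finding is a question to take back to the user, not a conclusion in itself.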

Data Source to Use

This method of validation is approached at the data element level, and disregards the particular documents which carry the data. The rationale for this method is that data, although initially aggregated to documents, tends to scatter, or fragment, within the data flows of the firm. Conversely, once within the data flows of the firm, data tends to aggregate in different ways. That is, data is brought together into different collections, and from many different sources, for various processing purposes.

Some data is used for reference purposes, and some is generated as a result of various processing steps and transformations. The result is a web of data which can be mapped irrespective of the processing flows. The data flow models, and the data models from the data analysis phases, are particularly useful here. The analyst must be sure to cross-reference and cross-validate the data model from the existing system.
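The web of data described above can be mapped element by element, without reference to the carrying documents. The sketch below is one possible representation, assuming each observation from the analysis is recorded as an (element, process, role) triple; the role names "creates" and "uses", the function names, and the sample observations are all hypothetical.

```python
from collections import defaultdict

ROLES = ("creates", "uses")


def build_source_use_map(observations):
    """Group (element, process, role) observations by data element."""
    web = defaultdict(lambda: {role: set() for role in ROLES})
    for element, process, role in observations:
        if role not in ROLES:
            raise ValueError(f"unknown role: {role!r}")
        web[element][role].add(process)
    return dict(web)


def unmatched(web):
    """Return (elements with no source, elements with no use)."""
    no_source = sorted(e for e, r in web.items() if not r["creates"])
    no_use = sorted(e for e, r in web.items() if not r["uses"])
    return no_source, no_use


# Hypothetical observations gathered from the data flow and data models.
observations = [
    ("employee id", "Hire employee", "creates"),
    ("employee id", "Compute pay", "uses"),
    ("gross pay", "Compute pay", "creates"),
    ("pay grade", "Compute pay", "uses"),
]
web = build_source_use_map(observations)
no_source, no_use = unmatched(web)
```

Elements which appear with a use but no source, or with a source but no use, are the places where the data model and the documented processing should be cross-checked.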

A Professional's Guide to Systems Analysis, Second Edition

Written by Martin E. Modell

Copyright © 2007 Martin E. Modell

All rights reserved. Printed in the United States of America. Except as permitted under United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a data base or retrieval system, without the prior written permission of the author.